01. question

In the highly competitive food industry, accurate decision-making is crucial for success. This is particularly relevant to our client in this case study, a vertically integrated food processing company. As a producer of meat and ready-to-eat products based in East Flanders, they supply not only their own network of points of sale, but also external customers. Effective forecasting plays a vital role in the company’s operational success. For this purpose, they use two forecasting models:

- Delivery forecasting model. This is designed to make long-term predictions about demand, which helps with production planning and inventory management. It is written in Python and uses a SARIMAX model (seasonal ARIMA with exogenous regressors), which combines delivery data from the production site with additional input on external factors, such as holidays and weather forecasts. For baseline comparison, a moving window model is used to establish a standard growth factor for the upcoming year.

- Retail demand forecasting model. This model is aimed at predicting sales at individual retail locations, using cash register data. It relies on random forest modelling, an ensemble of decision trees. For this model to produce accurate results, significant improvements were needed in both data quality and code structure.
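To make the baseline idea concrete, here is a minimal sketch of how a moving window growth factor could be computed. It is an illustration under stated assumptions, not the client’s implementation: the function names, the 12-month window, and the sample volumes are all hypothetical, and the growth factor is assumed to be the ratio of the most recent window total to the total of the window before it.

```python
from typing import List, Sequence


def moving_window_growth_factor(volumes: Sequence[float], window: int = 12) -> float:
    """Ratio of the most recent window total to the total of the window before it.

    Assumption: `volumes` holds one delivery volume per period (e.g. per month),
    ordered from oldest to newest.
    """
    if len(volumes) < 2 * window:
        raise ValueError("need at least two full windows of history")
    recent = sum(volumes[-window:])
    previous = sum(volumes[-2 * window:-window])
    if previous == 0:
        raise ValueError("previous window total is zero; growth factor undefined")
    return recent / previous


def baseline_forecast(volumes: Sequence[float], window: int = 12) -> List[float]:
    """Scale the most recent window by the growth factor to project the next one."""
    factor = moving_window_growth_factor(volumes, window)
    return [v * factor for v in volumes[-window:]]


# Hypothetical monthly volumes: a flat year followed by a year that is 10% higher.
history = [100.0] * 12 + [110.0] * 12
factor = moving_window_growth_factor(history)
forecast = baseline_forecast(history)
```

A baseline like this is deliberately simple: it gives the statistical model a reference to beat, and a forecast that cannot outperform it signals that the extra complexity is not yet paying off.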

The continuous refinement of these forecasting models, coupled with other business intelligence initiatives at the company, requires specialised expertise. This is where our Addestino data scientist, Francis, and data analyst, Michiel, come in. Embedded within our client’s Data & Analytics team, they lend their expertise to enhance the company’s data modelling efforts.

02. process

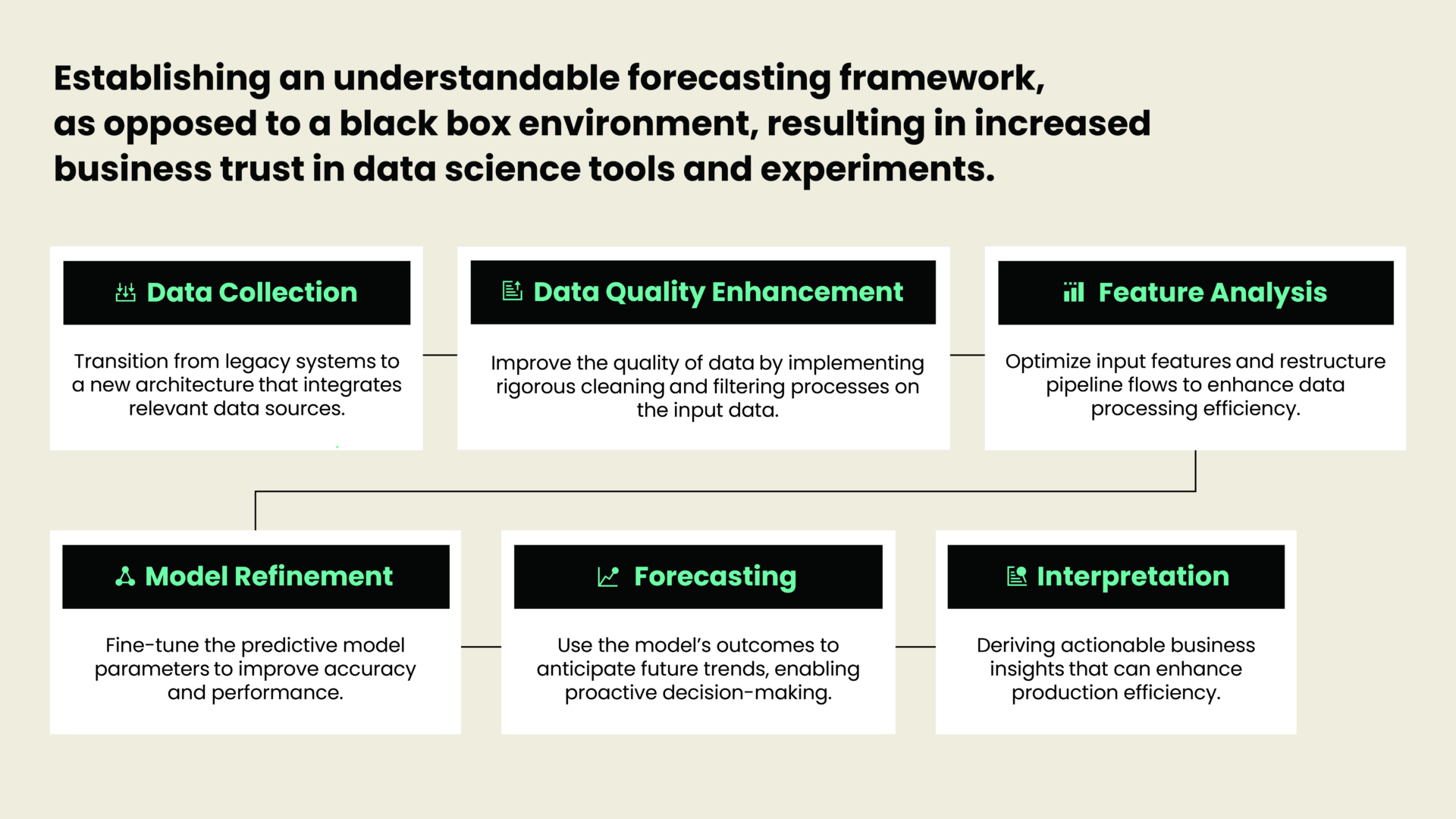

The role of our embedded consultant Francis is to improve the accuracy and reliability of both the delivery and retail forecasting models and to supervise their integration and maintenance. He also works in close collaboration with our client’s data team to tackle several other business intelligence challenges. Besides data quality optimisation, these efforts include:

- Development of cross-selling and market basket analysis. This involves predicting the probability that buying a certain product will trigger the purchase of another, related item.

- Feedback on new, innovative data science projects that are integrated into the client’s broader IT framework. This includes exploring new ways to leverage existing and untapped data streams to create new use cases and resulting value across the organisation.
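The cross-selling idea mentioned above can be sketched with the standard market basket measures of support, confidence, and lift. This is a minimal illustration rather than the analysis built for the client: the function name `pair_rules` and the sample baskets are hypothetical, and only pairs of products are considered.

```python
from collections import Counter
from itertools import combinations
from typing import Dict, Iterable, List, Tuple


def pair_rules(baskets: Iterable[List[str]]) -> Dict[Tuple[str, str], Dict[str, float]]:
    """Compute support, confidence and lift for every ordered product pair.

    The rule (lhs, rhs) reads as: "customers who buy lhs also buy rhs".
    """
    baskets = list(baskets)
    n = len(baskets)
    item_counts: Counter = Counter()
    pair_counts: Counter = Counter()
    for basket in baskets:
        items = sorted(set(basket))  # ignore duplicate items within one basket
        item_counts.update(items)
        pair_counts.update(combinations(items, 2))

    rules = {}
    for (a, b), count in pair_counts.items():
        for lhs, rhs in ((a, b), (b, a)):
            confidence = count / item_counts[lhs]      # estimate of P(rhs | lhs)
            lift = confidence / (item_counts[rhs] / n)  # P(rhs | lhs) / P(rhs)
            rules[(lhs, rhs)] = {
                "support": count / n,
                "confidence": confidence,
                "lift": lift,
            }
    return rules


# Hypothetical cash register baskets.
baskets = [
    ["sausage", "mustard"],
    ["sausage", "mustard", "bread"],
    ["sausage", "bread"],
    ["bread"],
]
rules = pair_rules(baskets)
```

Here confidence estimates the probability that the second product is bought given the first, while a lift above 1 suggests the two products appear together more often than their individual popularity would explain.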

03. insights

Refining modelling approaches is key to this kind of project. Meticulous adjustments and continuous improvement allow for more accurate forecasting across the chain, from the central production facility to the retail points and back. For example, the early-stage retail forecasting model now runs in a new environment with optimised data quality, setting the stage for new forecasting experiments with varying input parameters. Meanwhile, the delivery forecasting model is constantly being updated, with a migration to a new environment underway. Experience with Microsoft’s Azure cloud platform has been crucial in this endeavour. A few examples:

- Transitioning on-premises data to Azure Storage.

- Utilising Azure Data Factory as an orchestrator for modelling follow-ups.

- Employing Azure Batch to run various models as batch jobs.

04. results

The updated models and the resulting long-term strategy now serve as the basis for action plans and for introducing new features into the forecasting models. By adopting a new roadmap, we’ve laid the foundation for a unified approach to data science.

Additionally, our consultants have been instrumental in offering deeper insights into existing forecasting models and their capabilities across different teams and business units at the client. This has improved overall understanding and support for the models, while also facilitating the exploration of new features and potential use cases.
